Industry Insight

Magical Moments in AI Products

Why wow moments fade, what endures, and how to build products that continue to feel like magic

–

Over the last three years, as AI took the tech world by storm, companies and individuals tried out countless new products. New experimental budgets opened up, costs didn’t matter, and we were patient with mistakes. We were just happy to be in the presence of magic.

But now, heading into 2026, ROI is the focal point for AI solutions. Enterprises need to see real outcomes to keep investing at the levels they have been. That said, what is ROI really? In theory it’s a financial calculation, but in practice it’s a psychological one:

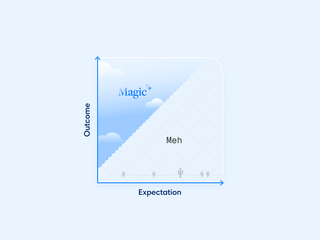

Expectation vs. Outcome

The "Investment" is the time, cost, and energy a user puts into a tool, ultimately shaping their expectations. The "Return" is the outcome they experience.

In 2023, seeing a chatbot write a mediocre poem felt like magic because our expectations were at zero. Today, our expectations have skyrocketed. If a tool doesn’t anticipate our next move, it feels like a chore. For product building, utility is no longer enough. To win, AI products have to deliver Magical Moments – outcomes that exceed the expectation line so dramatically that they reset the user’s mental model.

The challenge for builders is to sustain that gap, delivering a surplus of value before the line of expectation catches up again.
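As an illustrative shorthand (our own notation, not a formal model), the gap is simply the distance between the two lines in the Expectation vs. Outcome chart, and a product feels magical only while that distance stays clearly positive:

```latex
\text{Magic}(t) = \text{Outcome}(t) - \text{Expectation}(t), \qquad \text{magical while } \text{Magic}(t) \gg 0
```

Because Expectation(t) drifts upward with every release a user sees, holding Outcome(t) constant is enough for the gap to shrink.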

What Actually Counts as "Magical"?

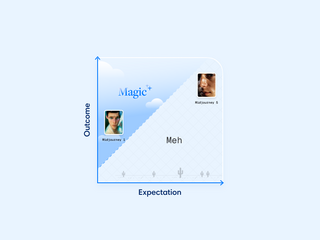

When the first AI image generators like Midjourney appeared in 2022, the results felt magical despite strange, imperfect images. Then, as each model version improved, the gap between expectations and output closed. By the time Midjourney V5 arrived, the images were vastly better, but the audience was no longer wowed. Instead, users trawled outputs for inconsistencies and flaws, such as malformed hands.

While the technology became more impressive, user expectations rose even faster. Each version reset the baseline of what’s possible, and the feeling of magic faded.

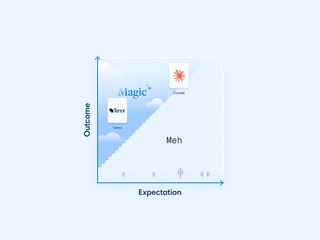

In categories like healthcare administration (Tennr) or IT helpdesk (Serval), the baseline is often manual labor performing decades-old processes. With expectations on the floor, straightforward AI automations that work reliably feel like magic.

Contrast this with users who live in AI all day, such as developers. Their expectations are sky high. To be "magical," you can’t just suggest the next line of code; you have to index their entire codebase and anticipate architectural updates. Claude Code wins because it exceeds such high expectations with even higher performance. Opus 4.5 and 4.6 raised the bar further, making Opus 4.1's previous magic the new floor.

This has two implications for building magical product moments:

- Magic is relative

- Magic is temporary

Designing for Magic

Since magic is relative (to the target user's current baseline) and temporary (decaying as soon as the novelty becomes the norm), product design becomes a race against a shifting expectation line. To win this race, you cannot rely on incrementalism. Instead, you must deliver a new outcome that is transformative compared to the status quo. There are two primary levers for achieving this.

- Redefining the Task (The "What")

You don’t deliver magic by making the old thing 30% faster, but rather by fundamentally redefining what that thing is. This creates irreversibility, moving the user from "slightly more efficient" to "entirely different."

Gamma exemplifies this. Instead of speeding up slide creation, the product made us question whether decks need to be built slide-by-slide at all. By removing the "slide-by-slide" grind, it changed what users expect a presentation tool to do. This redefines the task from assembling pages to shaping ideas.

- Transforming the Interaction (The "How")

Even when the task remains the same, changing how users interact with the system can reset expectations entirely.

The way we can interact with AI and agents sits on a spectrum – from chat, where the user initiates, to proactive suggestions, to invisible automation where work happens in the background. Moving along that spectrum lets newer AI products avoid comparison with legacy tools.

OpenClaw’s (aka Moltbot, aka Clawdbot) viral breakout comes from doing exactly that. By moving the assistant out of the browser and into everyday messaging, and by acting without being prompted, it resets what users expect an assistant to do. For many, it’s the first time AI feels truly autonomous.

Boardy is another example: it takes a conversational approach to relationship management, with no dashboard interface at all. Without the expected interface, Boardy avoids comparison to LinkedIn or traditional networking tools entirely. This absence of a reference point allows the product to feel magical because the interaction model itself is transformed. It’s not surprising that AI-native B2B CRMs are moving in this direction too (for example, Day AI, Monaco, Rox, among many others).

This is the frontier: reimagining what the workflow does, and how users experience it. The test is how quickly the recalibration settles in, and how quickly anything less begins to feel outdated.

How to Measure Magic

Given magic is a psychological equation, it’s easy to misread. Some of the strongest early signals in AI products – such as viral demos, social buzz, flashy outputs – turn out to be weak predictors of real magic. We think there are more reliable signals that you've created something truly magical.

Time-to-Transformation (Not Time-to-Value)

Traditional SaaS products often tout "Time-to-Value"; this made sense when software was making an existing workflow easier.

But AI products should track something different: Time-to-Transformation. This measures how long it takes a user to do something they literally could not do before, or accomplish a task that would have been impractical given their constraints. For OpenClaw, the Time-to-Transformation is a few hours: to set it up and see it perform an unexpectedly proactive or comprehensive step within a task.
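For teams that want to instrument this, here is a minimal Python sketch. It assumes a hypothetical event log where each user has a signup timestamp and, if it ever happened, the timestamp of their first "transformative" event. Deciding which event counts as transformative is the hard, product-specific part and is not captured here; the function name and data shapes are illustrative only.

```python
from datetime import datetime, timedelta
from statistics import median
from typing import Dict, Optional, Tuple


def time_to_transformation(
    signups: Dict[str, datetime],
    transformations: Dict[str, datetime],
) -> Tuple[Optional[float], float]:
    """Return (median hours to first transformative event, share of users who got there).

    `signups` maps a hypothetical user id to its signup time; `transformations`
    maps a user id to the time of that user's first transformative event.
    Users missing from `transformations` never reached the event: they are
    excluded from the median but still counted in the share.
    """
    hours = [
        (transformations[uid] - signed_up).total_seconds() / 3600
        for uid, signed_up in signups.items()
        if uid in transformations
    ]
    share_reached = len(hours) / len(signups) if signups else 0.0
    median_hours = median(hours) if hours else None
    return median_hours, share_reached


# Made-up data: two of three users reach the event, after 2 and 6 hours.
t0 = datetime(2026, 1, 5, 9, 0)
signups = {"a": t0, "b": t0, "c": t0}
transformations = {"a": t0 + timedelta(hours=2), "b": t0 + timedelta(hours=6)}
print(time_to_transformation(signups, transformations))  # median 4.0 hours, share about 0.67
```

Reporting the share alongside the median matters: a short median is not much of a signal if only a small fraction of users ever get there.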

The Irreversibility Ratio

In a similar vein, whereas applications typically track "daily active users", AI products should track "irreversible" users.

How do you measure this? Look for:

- Resurrection rate: Users who lapse and then return specifically because they realized they need the product.

- Vocal advocates: Unprompted posts or reviews that say "I can't work without this".

- Workflow replacement: Are users shutting down the old tool?

The irreversibility ratio is the percentage of your active users who've replaced their old workflow, not just supplemented it. When this number is high, you've built something magical. In the case of Tennr, irreversibility is achieved when customers redeploy team members to other functions because Tennr has completely replaced the old workflows around wrangling documentation.
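A minimal Python sketch of how the irreversibility ratio and the resurrection rate might be computed, assuming a hypothetical per-user record (the field names are ours, not a standard schema). Logs can show that a user lapsed and came back, but not why; the "because they realized they need the product" part would still need a survey or interview to confirm.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class UserRecord:
    # Hypothetical per-user signals; the field names are illustrative only.
    active: bool            # active in the current period
    retired_old_tool: bool  # replaced the legacy workflow, not just supplemented it
    lapsed: bool            # stopped using the product at some point
    returned: bool          # came back after that lapse


def irreversibility_ratio(users: List[UserRecord]) -> float:
    """Share of currently active users who have retired their old workflow."""
    active = [u for u in users if u.active]
    if not active:
        return 0.0
    return sum(u.retired_old_tool for u in active) / len(active)


def resurrection_rate(users: List[UserRecord]) -> float:
    """Share of lapsed users who later came back (logs alone can't say why)."""
    lapsed = [u for u in users if u.lapsed]
    if not lapsed:
        return 0.0
    return sum(u.returned for u in lapsed) / len(lapsed)


users = [
    UserRecord(active=True, retired_old_tool=True, lapsed=False, returned=False),
    UserRecord(active=True, retired_old_tool=False, lapsed=True, returned=True),
    UserRecord(active=False, retired_old_tool=False, lapsed=True, returned=False),
    UserRecord(active=True, retired_old_tool=True, lapsed=False, returned=False),
]
print(irreversibility_ratio(users))  # 2 of 3 active users retired the old tool
print(resurrection_rate(users))      # 1 of 2 lapsed users came back: 0.5
```

The ratio deliberately uses active users as the denominator, matching the definition above: it asks how many of the people still using the product have stopped using the old way, not how many signed up.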

Magic Moats = Velocity + Taste

If magic is about exceeding expectations, and expectations keep rising, then magic on its own isn’t defensible. The advantage comes from how quickly you can create the next reset, and how accurately you can anticipate where the baseline is heading.

Magic decays. It feels like the half-life of "wow" in AI products right now is about a quarter long. So companies win by out-shipping the decay curve.
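Taking the "half-life of about a quarter" literally and assuming exponential decay (an illustrative assumption, not a measured curve), the wow W remaining after t quarters would be:

```latex
W(t) = W_0 \cdot 2^{-t / T_{1/2}}, \quad T_{1/2} \approx 1 \text{ quarter}
\;\Rightarrow\; W(2) = W_0 \cdot 2^{-2} = 0.25\,W_0, \qquad W(4) = W_0 \cdot 2^{-4} \approx 0.06\,W_0
```

Under this toy model, a year without a new reset leaves roughly 6% of the original wow, which is why shipping cadence matters more than any single launch.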

ElevenLabs went from basic text-to-speech and voice cloning to real-time multilingual conversational agents. Competitors are still trying to catch up to their 2023-quality cloning while ElevenLabs is shipping new layers of magic on top of that foundation. Companies like ElevenLabs know that magic will decay, and their moat is derived from the organizational capability to keep shipping the next thing before users catch up.

OpenClaw’s breakout will be an interesting test. What feels impressive now will be normalised fast, especially once more accessible products like ChatGPT or even Siri adopt similar patterns. OpenClaw’s longevity will be determined by its open source community and whether it can rapidly iterate.

Speed alone isn’t enough, though. As baselines shift, the harder question is where to focus next. That’s a matter of product taste, not data. The strongest teams anticipate what users will expect before they can articulate it.

Cursor understood that after basic autocomplete, developers would want full codebase context, and Abridge recognised that once patient conversations were captured in clinical notes, there was additional value in using those notes to layer on revenue cycle intelligence.

The Magic Treadmill

As we study emerging trends and categories, we’re seeing a clear filter emerge. Incremental AI features – a “summarize” button here, a “rewrite” toggle there – are no longer magical. They don’t change habits; they only supplement legacy interfaces.

The products that win are part of a broader irreversibility shift because the software permanently changes user behavior. When a clinic adopts Tennr, it stops needing humans to behave like machines just to manage paperwork. When developers use Claude Code, their baseline for what “starting from scratch” means is redefined, and they spend more time thinking in outcomes than in syntax.

Once behavior changes, expectations follow. To stay relevant in this market, you have to build for the next baseline before it arrives. The reason the "Magic" fades is actually a beautiful thing: it means our expectations have evolved. What was a "miracle" yesterday is just the way life works today.

–

Thanks to my partner, Shravan, for his mildly helpful thoughts and feedback during drafting.